*University of Oxford email: alberto.giubilini@philosophy.ox.ac.uk

**Arizona State University email: rgurarie@asu.edu

***University of Oxford email: euzebiusz.jamrozik@ethox.ox.ac.uk

We discuss the relationship between expertise, expert authority, and trust in the case of vaccine research and policy, with a particular focus on COVID-19 vaccines. We argue that expert authority is not merely an epistemic notion, but entails being trusted by the relevant public and is valuable if it is accompanied by expert trustworthiness. Trustworthiness requires, among other things, being transparent, acknowledging uncertainty and expert disagreement (e.g., around vaccines’ effectiveness and safety), being willing to revise views in response to new evidence, and being clear about the values that underpin expert recommendations. We explore how failure to acknowledge expert disagreement and uncertainty can undermine trust in vaccination and public health experts, using expert recommendations around COVID-19 vaccines as a case study.

Keywords: trust, expertise, vaccination, COVID-19, transparency, uncertainty

In September 2021, the Joint Committee on Vaccination and Immunisation (JCVI), which advises the UK Government, decided not to recommend COVID-19 vaccines for children aged 12–15. As they stated,

There is evidence of an association between mRNA COVID-19 vaccines and myocarditis. [...] There is considerable uncertainty regarding the magnitude of the potential harms. The margin of benefit, based primarily on a health perspective, is considered too small to support advice on a universal programme of vaccination of otherwise healthy 12 to 15-year-old children at this time.1

A few days later, the UK Government decided to authorize mRNA COVID-19 vaccines for 12–15-year-old children. Did the Government thereby give up on “follow the science,” the principle that was said to have informed its pandemic policy until then? Not quite. First, scientific experts were divided on the issue. Some experts, including officials at the US Centers for Disease Control and Prevention, were recommending COVID-19 vaccines for 12–15-year-old children on the basis of their medical benefits.2 Others were not. Second, the JCVI’s recommendation concerned only the medical aspect of the issue, that is, whether the risks of side-effects outweighed the individual benefits in terms of protection from the risks of COVID-19.

The Government’s decision was based on a broader range of considerations, such as the potential for disruptions in school attendance and mental health costs due to restrictions that vaccinating children could have averted.3 Ultimately, the Government concluded that uncertainty around the medical benefits of vaccination was not so large as to prevent authorizing – and indeed, strongly recommending – child COVID-19 vaccination. This decision was political. It was based on a value judgment about what counted as “enough” certainty in the level of safety and effectiveness of vaccines and on political choices regarding the conditions for the relaxation of harmful restrictive policies for children (e.g., school disruptions).

Some vaccine experts were taking a cautious approach and acknowledging uncertainty. Others were more confident about the COVID-19 vaccines’ overall net medical benefit to children. Yet other types of experts were considering the wide array of children’s interests (e.g., school attendance) beyond the prevention of COVID-19 infection.

This article is about the trustworthiness of experts in the context of vaccination. We argue that knowledge (e.g., regarding relevant scientific data) is important, but not always necessary, for expert authority. In the sphere of public policy especially, but arguably more generally, expertise and expert authority require trust by a relevant public. Expert status and expert authority are not necessarily undermined by lack of knowledge, but they will be undermined by failures of transparency in the acknowledgement of scientific uncertainty, absence of knowledge, and expert disagreement about scientific knowledge. We defend these claims first, and then apply them to both vaccine research and policy, particularly in the context of recent debates regarding COVID-19 vaccines. In this paper, we do not aim to solve the problem of whom we should trust in cases of expert disagreement. Instead, we argue that experts can improve their trustworthiness among the general public when they openly acknowledge two things: first, relevant uncertainties regarding knowledge claims; second, the fact that disagreement between experts can exist, due in part to uncertainty and in part to different value judgements.

The trust we are concerned with is a form of reliance on someone else, an expert, considered to possess knowledge or skills that are relevant to specific goals in which we have some stakes: often (but not necessarily) skills and knowledge that we don’t possess.4 That is, we refer to trust in an epistemological sense. One can also trust someone for ethical reasons, for instance when one trusts someone’s good intentions, commitment to some ethical principle, or capacity for moral judgement. The two types are often related. On some views, trust in someone is always also trust in some moral skills, such as being honest, or caring about others, or having “goodwill,”5 or, we might add, someone’s professionalism. Even if one rejects such general accounts of trust, it is more difficult to reject the idea that trust in someone’s expertise specifically does involve a moral component. Having trust in someone’s expertise typically requires trusting their commitment to relevant ethical principles which are essential both for acquiring knowledge and for putting knowledge into practice. These include, for example, humility to acknowledge the limitations of their knowledge and the boundaries of their areas of expertise; being honest and transparent in acknowledging uncertainty and disagreement; or being committed to resolving conflicts of interest by prioritizing professional obligations over personal benefits. Guidelines for epidemiologists and public health policymakers often recognize the importance of trust and the key role played by ethical values in fostering public trust. For example, the American College of Epidemiology states in its Ethics Guidelines that “[t]rust is an expression of faith and confidence that epidemiologists will be fair, reliable, ethical, competent, and nonthreatening.”6

Because trust is a form of reliance on others’ skills, knowledge, and moral traits, trustors are dependent, for certain purposes, on those who are trusted. This puts trustors in a position of vulnerability, i.e., susceptible to being wronged or harmed by those who are trusted. Trust is therefore a double-edged sword. When we trust someone, it is because we think or hope this person is trustworthy, although that might not be the case. Experts need to be trusted not just because they are credible, that is, likely to be believed. They also need to be trusted because they are trustworthy, that is, deserving of trust,7 both with respect to their epistemic credentials and with respect to the ethical way in which they put those credentials into practice.

In the healthcare context in particular, trust is linked to better healthcare service utilization, adherence to treatment, and self-reported health.8

As we suggest in the next section, the connection between trust and expertise is not only political and ethical; that is, it is not only about the value of trust in expert authority in liberal democracies. It is arguably also a conceptual relationship. We argue that trust is constitutive of at least a certain type of expertise, namely expertise about matters that affect the interests of the general public or relevant portions of it. One cannot be that type of expert without being trusted as an expert. The very notion of expertise and of expert authority would be undermined by the erosion of trust. Trustworthiness ensures that expertise is a useful, beneficial societal good.

It seems plausible to think that trustworthiness is based, among other things, on transparency regarding epistemic limitations in one’s knowledge as well as value judgments involved in evaluations of evidence. As applied to epistemic conditions, the capacity to recognize uncertainty is itself part of what it means to possess knowledge in a certain domain. Transparency as an ethical requirement therefore arguably entails acknowledging ignorance, uncertainty, and expert disagreement, where relevant. The JCVI’s statement quoted earlier is a good example of how expertise is consistent with acknowledging uncertainty.

There are longstanding debates in philosophy of science regarding whether science is a purely empirical matter or the extent to which it is an activity laden with moral (and other) values.9 When individuals are called on in their public role as experts and therefore their expertise affects the interests of the public, the latter aspect seems predominant: their evaluations of scientific evidence are typically grounded in value judgments regarding whether the level of available evidence or knowledge is enough to warrant a claim or recommendation – be it policy advice or advice on individual choices. This depends, for example, on whether we consider the risks of acting on uncertain data to be worth taking.10 Value judgements of this sort are unavoidable. Trustworthiness requires experts to be transparent about how values contribute to recommendations and to the judgment that a certain level of certainty is high enough, and to avoid claiming that their judgements are “purely” scientific. In the words of Philip Kitcher, “[t]he deeper source of the current erosion of scientific authority consists in insisting on the value-freedom of Genuine Science, while attributing value-judgements to the scientists whose conclusions you want to deny.”11

Do experts need to be right about matters concerning their area of expertise to be legitimately considered experts and granted epistemic authority? The answer must be “no”. Surely, it is conceivable that experts are sometimes wrong, or even that experts are often wrong in certain circumstances. Again, this is a problem that epidemiological institutions sometimes acknowledge. For instance, IEA Guidelines of good practice state that “[e]pidemiologists should be wary of publishing poorly supported conclusions. History shows that many research results are wrong or not fully right, and epidemiology is no exception to this rule.”12

Moreover, there is often uncertainty and/or disagreement among experts for reasons that do not have to do with epistemic failures. An outbreak of a novel virus or the development of a new vaccine technology are examples of such cases. While being wrong or being uncertain does not necessarily mean that someone ceases to be an expert, it might undermine one’s expert authority if not carefully managed. We define expert authority as the extent to which experts are trusted in their field of expertise to provide reliable information. We are not using the concept in a political sense, i.e., to describe the authority that experts are sometimes given in political decision making. We also want to keep it distinct from the notion of epistemic accuracy: the “authority” we talk about often depends on the perception of accuracy by a relevant audience, not simply on accuracy itself. To see how being wrong or uncertain can undermine this type of expert authority, consider an expert who makes confident claims about the safety of a new vaccine even while data regarding rare adverse effects are still being collected. In the short term, this might sustain or increase expert authority and trust in vaccines; however, if the new vaccine turns out to be less safe than the expert initially claimed, this might undermine this type of expert authority (and trust in vaccines) in the long term.

Both expertise and expert authority are concepts that need to be unpacked. We start with the latter because, once authority is properly understood, it is easier to see the intimate connection between expertise, expert authority, and trust.

Moti Mizrahi claims that “the mere fact that an expert says that p does not make it significantly more likely that p is true.”13 Mizrahi bases this claim on several empirical considerations about the frequency with which experts in various fields have been proven wrong, and the magnitude of their mistakes. Importantly, Mizrahi is not trying to question expertise itself. Instead, he wants to question, or at least cast some healthy skepticism on, expert authority, understood as being trusted to provide reliable information in one’s field of expertise.

The term “significantly” in Mizrahi’s claim points exactly to the issue of expert authority. Presumably, expert authority requires a minimum level of confidence in the reliability of expert claims, and not just any level that is higher than the mere chance of being right. After all, Mizrahi writes, “Would you trust a watch that gets the time right 55% of the time?” One way to challenge Mizrahi’s skepticism about expert authority is to provide evidence that experts in any given field have been right significantly more often than, say, 55% of the time.14 We are not addressing this empirical issue here.

Instead, we want to point out that significant disagreement between experts would create a conceptual, and more challenging, problem for our notion of expert authority. Suppose two otherwise similar experts (e.g., with similar track records of being correct, similar credentials, etc.) disagree on whether COVID-19 vaccination is, generally speaking, in healthy children’s best medical interests. This is essentially the situation we had when COVID-19 vaccines were authorized for use in adolescents and children. In such cases of disagreement, one might conclude that the two conflicting expert views would, so to speak, cancel each other out: neither group is “significantly” more likely to be right than the other. People would then be left not knowing which experts to trust, that is, to which group they should attribute authority.

Alvin Goldman15 suggests five possible criteria for deciding to whom to attribute epistemic authority in cases of expert disagreement. These are: 1) the reasons each party can bring for and against the views at stake; 2) the extent to which other experts agree with either view (a kind of expert majority rule); 3) appraisal by “meta-experts” (e.g., those providing credentials of expertise, such as academic degrees, professional accreditations, meta-researchers, and so on); 4) evidence of any conflicts of interest or biases among experts; and 5) experts’ past track records.

It is hard to see how any of these could be of much use in the scenario above to any parent who needs to decide which experts to trust. As for criterion 1, Goldman himself acknowledges that non-experts’ capacity to assess conflicting expert arguments is often limited. Parents might be interested in experts’ views on whether the risks of vaccines outweigh their medical benefits because, among other things, parents might not have the time and resources to master statistical methods or search for and assess evidence themselves. If they could use these tools to assess the veracity of experts’ claims, they might be eligible to be experts themselves. As for criteria 3 and 5, experts disagreeing on vaccines’ safety and efficacy may not have significantly different credentials or track records.

Depending on how the relevant expert community is defined, there might well be many experts agreeing with either position, as per criterion 2. However, “majority rules” is a fragile basis for expert authority, as majorities can be formed out of bad incentives. For example, Goldman says, some (putative) expert might belong to some “doctrinal community whose members devoutly and uncritically agree with the opinions of some single leader or leadership cabal.”16 A risk of creating such communities exists in domains characterized by heavy societal polarization (including vaccination policy). A polarized society or scientific community might well create non-financial incentives affecting experts’ claims on either side, although it may be difficult to assess the extent to which such incentives affect the veracity of experts’ claims (criterion 4). Non-financial incentives can be reputational, in terms of opportunities for career progression, or visibility or popularity among certain groups, and so on. The influence of such non-financial interests on professional conduct is widely acknowledged both in biomedical research17 and in health care.18 Vaccine experts are not immune from such dynamics. These incentives may bias expert evaluations of evidence, e.g., regarding the expected benefits and harms of novel interventions.

In this section, we explore the intimate connection between expertise and trust by pointing out some problems with alternative accounts of expertise that do not rely on it. Some critiques of expert authority, including Mizrahi’s critique presented above, assume a certain conceptualization of expertise as a purely epistemic notion. They presuppose, more specifically, a ‘veritist account’ of expertise, whereby an expert is someone more likely to hold true or reliable beliefs within a certain domain, either more likely than most other people or more likely than a certain threshold.19 Other kinds of epistemic bases for expertise could be provided, for example, following certain procedures of scientific inquiry or certain methodologies for inferring conclusions.

However, we need to be careful when we select the relevant epistemic conditions for expertise. The idea that expertise is defined in terms of some special access to true propositions presupposes that expert status can only be determined from a standpoint where we already know the truth.20 This is problematic for three reasons. First, such a standpoint might often be unachievable in principle (given intrinsic epistemic limits of human beings). Second, even if it were achievable, non-experts are unlikely to have access to all relevant facts, including those one would need to know in order to assess if someone making claims within that domain is an expert. Third, if non-experts had access to all relevant facts, not only might they be eligible to be experts themselves, but the notion of expertise would lose much of its practical purpose.

Indeed, it is the fact that someone we believe to be an expert makes a certain claim that provides good reason to believe that claim is true,21 and not the other way round. In this sense, attribution of expertise is a matter of epistemic trust, because it implies giving credit to someone’s epistemic status without being ourselves in the position to assess the truth, or even the epistemic credentials, of their claims. It is true that sometimes we can test experts’ opinion a posteriori, via empirical verification of their predictions. For example, we could start vaccinating children and check if the outcomes are consistent with expert predictions and suspend our attribution of expertise until we find out which experts were right. However, we don’t typically wait to attribute expertise to individuals only a posteriori. Instead, we often rely on – and trust – their judgements before finding out if they are right. This intimate relationship between expertise and epistemic trust means that expertise is not an intrinsic property of an individual or a group thereof, which can be established simply through conceptual analysis and independently of the societal dynamics and relationships in which it is created. Instead, expertise is the possession of any set of epistemic features that warrant trusting someone as an expert. Knowing the truth typically contributes to it, but it is not a necessary condition for it. Thus, a conceptual analysis of whether someone is an expert is not separable from an assessment of whether a certain relationship of epistemic trust can be justified between that individual and the relevant community.

One option is to rely on peer recognition as a reason to trust someone’s expertise, or on the institutions that confer certificates of expertise (such as academic degrees). Some take expertise to be the condition where one can contribute to domain-specific conversations with one’s peers, after one has learned to master the language and methodologies specific to a certain domain.22 For example, experts in the JCVI and in the CDC disagreed with each other about whether the available evidence supported the claim that COVID-19 vaccines are in young adolescents’ best medical interests. Presumably, however, they shared a definition of “best medical interest” and criteria to establish such interests. On the domain-specific conversation view, the expertise of both groups is given by the fact that they can engage in this type of meaningful peer conversation, even though it might turn out that one of the two expert groups was incorrect. For example, experts who disagree could try to identify each other’s possible mistakes, work together towards new positions, and so on. Individuals can engage in meaningful conversation, even in one that produces good results for society, while disagreeing about what is true. Knowledge can be built in this way, and often will be. But we consider these people ‘experts’ before, and sometimes regardless of whether, they attain it, for example when they investigate a new phenomenon and uncertainty is to be expected.

This might well be a good proxy, or perhaps even a necessary condition for expertise. However, it is not sufficient. At a minimum, there must also be some level of societal relevance of a certain field which warrants attribution of expertise, especially, as we suggested earlier, with expertise about matters that affect the interests of significant portions of the public. I could claim to be an expert on what I had for breakfast, or someone else could claim to be an expert in alchemy, but these uses of the “expertise” terminology are quite awkward. In neither case is the knowledge in question societally relevant. Unlike vaccines’ safety and effectiveness, what one person had for breakfast is typically uninteresting to anyone in society, and alchemy has effectively no current societal relevance (though of course there can be historical or anthropological relevance). Instead, experts are presumed to be better placed to assess how to meet needs and interests of individuals and society.23 Fulfilling epistemic conditions defines expertise only to the extent that the field in question is relevant, in this sense, to a given audience; in the case of expertise in public health, the relevant audience is the general public or significant portions of it. This suggests that expertise encompasses more than merely meeting epistemic conditions (including, sometimes, possessing knowledge). It includes, in particular, the capacity to recognize relevant aspects of decision-making processes, such as how individuals’ and society’s interests will be affected (that is, whether expertise is used to promote or infringe them; after all, expertise can also be used against societal interests), or the distinction between scientific claims and ethical-political values, so as to separate expert recommendations from political advocacy. In all such cases, we need to trust that someone’s epistemic status serves these goals.

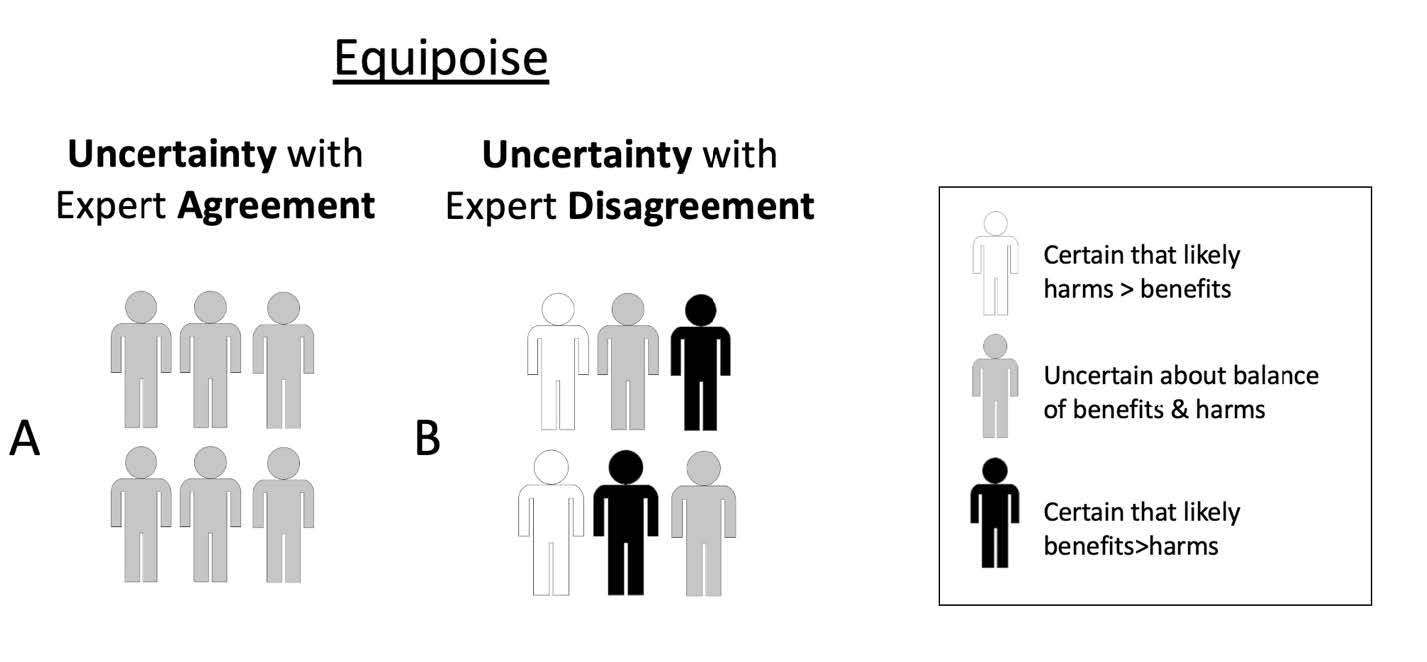

If this is correct, then it is impossible to separate expertise from being trusted as having expert authority. The epistemological problems that make it difficult, if not impossible, to determine expert authority based on access to true propositions would also make it difficult, if not impossible, to determine expertise on the same grounds. If expertise is a matter of trust, those with expert authority are those whom we have stronger, or sufficiently strong, reasons to trust. These reasons can be of different sorts. We can trust their claims to be either true, or useful, or grounded in adequate training or qualifications, or based on certain methodologies of inquiry, or anything else that possesses societal relevance. After all, expertise is not only cognitive, but also performative: it consists in solving problems, giving advice, providing reasons for believing that something is true.24 This does not answer the question of whom we should trust in cases of expert disagreement. However, it makes expert authority independent of the answer to that question. It suggests that being right about things is neither sufficient nor necessary to give experts their authority. Topics covered by expertise, claims made by experts, and solutions experts propose need to be sufficiently relevant to a given audience. And experts with authority, that is, those whom we have strong enough reasons to trust, can sometimes be uncertain (as individuals) and/or disagree with each other about current evidence (Figure 1). In both situations, experts are collectively uncertain even if some individual experts are certain. Both types of situations are consistent with (a significant degree of) so-called “equipoise,” that is, epistemic equilibrium between conflicting claims resulting in significant uncertainty, an issue we will address in section 4 below.

There is both an internal and an external legitimization of experts’ claims and credentials.25 Internal legitimization is established by criteria that experts themselves agree upon in their own deliberative forums. However, as we have seen, that is not sufficient for expertise. Recognition of the relevance of certain fields matters as well. External legitimization is largely about public trust in the testimony of experts.

While it is unavoidable to ground many of our beliefs in epistemic dependence on experts, there might often be conditions that justify rejecting experts’ claims. These include, for instance, evidence that experts are refusing to acknowledge mistakes, or that they are subject to conflicts of interest, including social pressure from others in the same field.26 Both can result in refusal to acknowledge uncertainty.

We often hear of a crisis of expertise, that is, decreasing levels of trust in experts.27 However, it has been noted that overall levels of trust in experts do not drop, but simply shift among different domains of expertise. If we stop trusting certain experts, we will simply move that trust on to others.28 In the context of public health, pre-pandemic Pew Research Center data suggested that public confidence in science and medicine in the US had been relatively stable, at about 40%, over the previous three decades.29 During the pandemic it dropped below 30%.30 Among other factors, perceived overconfidence in the medical field can be detrimental to trust.31 Other fields, such as economics, have long accepted that overconfidence can undermine trust in experts and that acknowledging the limitations of experts’ knowledge can promote trust.32

A lack of trust in public health experts can be particularly serious insofar as public health experts need to enjoy what Matthew Bennett calls “recommendation trust,” that is, trust that “I should do something because they have told me I should.”33 Recommendation trust requires that recommendations are followed not out of fear of the consequences of disobedience, but because trust by itself provides enough reasons to follow them. In a liberal democracy, fear of consequences can be antithetical to trust, as it can hinder contestation of experts. Freedom to contest expert claims may enhance trust by demanding transparent justification of recommendations. For example, vaccine-critical activists may, by challenging mainstream narratives about the benefits of vaccines, create the demand for constantly improving the justifications for vaccination policies based on expert advice.34

As we discuss below, debates in vaccine research and policy, particularly in the case of COVID-19 vaccines, exemplify the issues around expertise and trust that we have presented so far. Questions about expert disagreement have arguably received greater attention in the context of research ethics,35 especially within discussion of “equipoise” (see below). However, we suggest that policy surrounding COVID-19 vaccine research has failed to place enough weight on expert disagreement, for instance regarding the need to collect additional data on benefits and harms in specific groups before recommending or mandating vaccines for those groups.

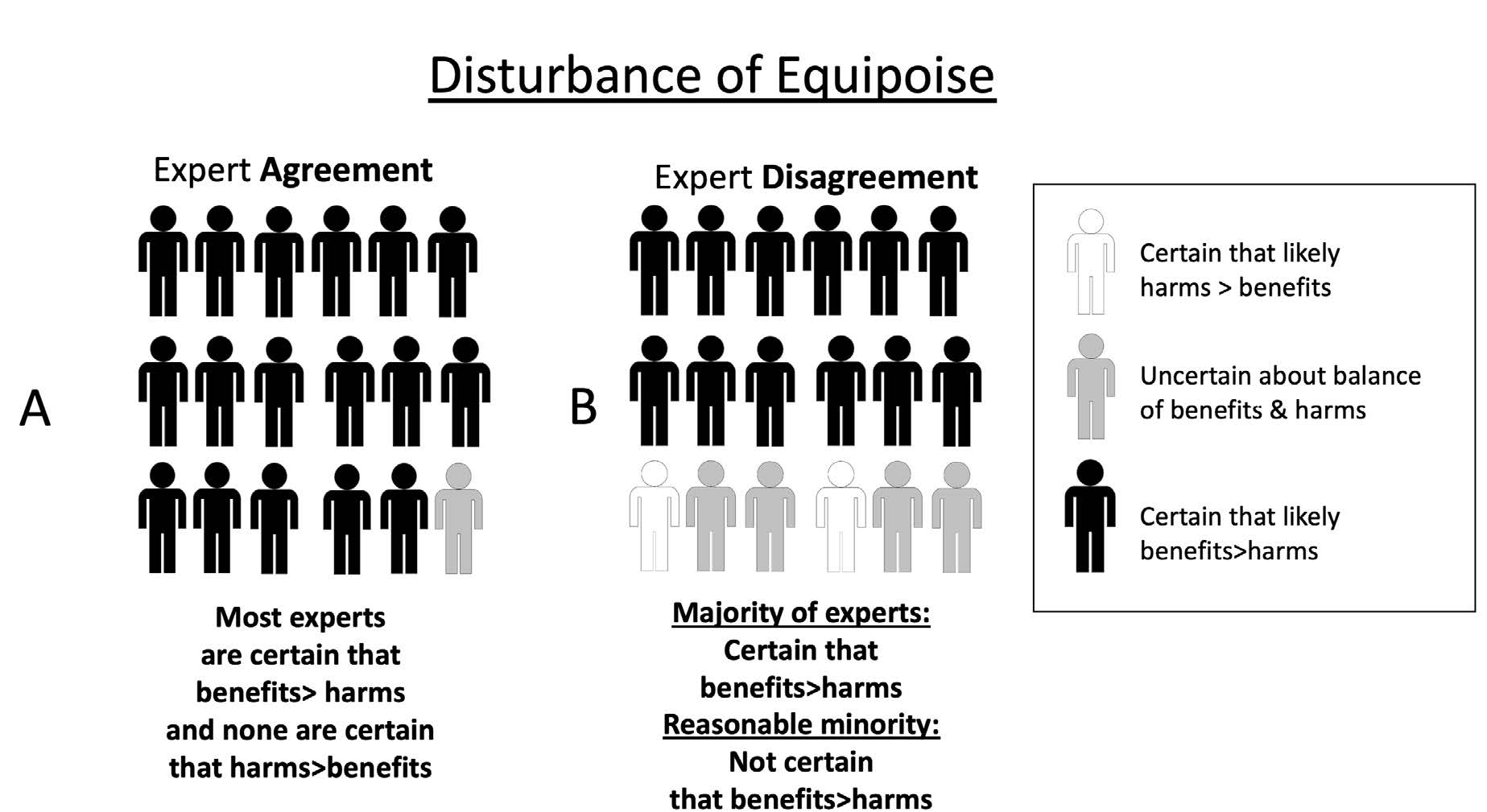

In research ethics, questions about the degree of expert consensus have played a key role in debates about when it would be ethically acceptable to begin studies (especially randomized controlled trials) and/or when to terminate a study on the basis of data showing a clear net benefit or net harm associated with an experimental intervention. Initial theories of equipoise – the term commonly used in the field of research ethics – focused on the idea that an individual clinician would consider it ethical to enroll her patient in a clinical trial if the clinician was genuinely uncertain about whether an experimental treatment was better than standard care.36 Later theories focus on equipoise of expert community opinions: a research study is considered ethical if, in the collective opinion of a community of well-informed experts, there is a sufficient degree of uncertainty and therefore equipoise between the expected balance of benefits and harms in the intervention and control arms in the study. In other words, the balance of expected benefits and harms of a new, experimental intervention versus an existing one that is used in the control arm is considered uncertain, given the disagreement among experts, in the given population under study (and for a potential participant as a representative of the study population).37 As illustrated in Figure 1, situations of community equipoise might sometimes occur when there is expert consensus (about uncertainty). They can also occur when there is expert disagreement, because some experts are certain that an experimental intervention is superior to an alternative (whether placebo or standard of care), some are uncertain, and some are certain that the experimental intervention is inferior to the alternative. 
Similarly, as shown in Figure 2, situations where equipoise is disturbed (i.e., where most experts are certain) can occur with widespread expert agreement (Figure 2A) or where there is some degree of expert disagreement (Figure 2B).

This raises questions about ethically acceptable approaches to situations of expert disagreement. A simple approach might be “majority rules”: once a majority of experts believe that evidence from research is sufficient to be confident that the expected benefits of an intervention outweigh its risks in a particular context, then research should stop, and the intervention should be implemented in policy. However, the history of science includes many examples illustrating that a minority of experts initially considered mistaken by the majority may sometimes later be proven right.38 Thus, a “majority rules” approach to equipoise among experts would sometimes rule out ethically acceptable research where most experts mistakenly considered the research question settled (i.e., these experts were, taken together, certain that either the intervention or the control was overall superior in the population in question) but where a minority of experts correctly considered that there is still significant uncertainty about the benefit-risk ratio of an intervention.

One way of accounting for disagreement in the context of judgements about community equipoise is to specify that equipoise persists where “at least a reasonable minority of experts […] would recommend that intervention for that individual”39 (i.e., the situation in Figure 2B). Of course, there might be different views about what a “reasonable” minority might be (and appropriate thresholds might vary in the context of different health problems, interventions, populations, etc.). However, this “reasonable minority” view at least provides a starting point to explain why research might sometimes be ethically acceptable even when a majority of experts consider that it would be unacceptable.

In the case of the rollout of mRNA COVID-19 vaccines, it was arguably ethically acceptable to collect additional data on the risks of myocarditis in young people because of the concerns of a minority of experts, even if a majority of experts considered such vaccines sufficiently safe for use in young people.40 Continuing to collect additional data might even be ethically required (rather than merely acceptable). This would be the case, for example, if there is reasonable disagreement about whether an identifiable group faces risks that outweigh benefits, even if there is widespread agreement that benefits outweigh risks in the overall population to which the group belongs. Similarly, once COVID-19 vaccines were shown to be effective, it was arguably at least ethically acceptable to continue to collect data among placebo recipients in initial trials, even if most experts thought that there was an ethical imperative to give vaccine access to low-risk placebo-arm participants who did not otherwise belong to an eligible population group.41 However, this consideration must be balanced against duties of care.42

In some cases, a minority of experts who remain uncertain (in equipoise) about the balance of benefits and harms of the vaccine in the population in question face a dilemma. If most experts (who believe the vaccine offers a net benefit over placebo) or members of the public become aware of the minority view, this minority group may face negative consequences as a result of holding such views.43 This might be especially likely to occur where (i) debates about vaccination in general become highly polarized, (ii) the vaccine in question is highly politicized, and/or (iii) the majority group seeks to ostracize and/or silence the minority group of experts. To resolve this dilemma, experts in a minority group may either falsify their preferences (i.e., publicly agree with the majority group while continuing to have private doubts about the majority position),44 or publicly express their disagreement and accept any negative consequences.

Arguably, these patterns were observed during debates about COVID-19 vaccines. In some cases, they led to experts silencing themselves, being silenced by others, or being silenced in other ways.45 For example, the authorization of vaccine boosters for young healthy adults in the United States in the absence of efforts to collect more data resulted in the resignation of prominent vaccine regulatory experts at the US Food and Drug Administration.46 This occurred in the context of wider debates about the merits of boosters in low-risk populations.47 Further, differences in expert opinion about COVID-19 vaccines between experts in different countries arguably revealed more uncertainty about a particular intervention at the global level than experts in one country might have been willing to admit.48

The authorization of COVID-19 vaccines despite these debates conveyed the idea that equipoise had been disturbed, i.e., that there was sufficient certainty among experts. By implication, further research would have been considered unethical. Yet a minority of experts considered it still uncertain whether COVID-19 vaccines (in standard or booster schedules) offered net benefits for low-risk groups such as healthy adolescents, children, or those with immunity after previous infection.

The goal of much vaccine research is to produce data to support new vaccines or refine the use of existing vaccines to promote net public health benefits. This goal raises questions about how much evidence is required before it would be appropriate to begin (or continue/discontinue) the rollout of a vaccine in public health practice. These questions arguably mirror those in research ethics, since as soon as vaccine trial data are considered to disturb equipoise in favor of the vaccine, at least some experts may recommend approval of the vaccine for public use (cf. Figure 2B).

If one takes a “majority rules” view of consensus among experts, answers to such questions are straightforward. Once a reasonable majority of experts agree that the vaccine in question offers a superior balance of benefits over harms in a given population, the trial should be stopped, and the vaccine can be recommended accordingly. This is where expertise on vaccination science may overlap with expertise regarding public health policy. Within public health, the “majority rules” view about the ethical acceptability of implementing an intervention (supported by at least some research data) faces similar challenges to those in research ethics – especially where majority consensus conceals significant disagreement (Figure 2B). In public health practice, there are multiple potential sources of reasonable disagreement among experts, especially when interventions are novel, studies are few, or there are other epistemic limitations regarding the generalizability of existing results.

Debates about public health policy can be even more polarized than debates about research. Where at least some relevant research has been completed, some experts (sometimes most experts) may consider that the (net) benefits of the vaccine (or another intervention) have been proven beyond reasonable doubt. In such cases, it might be tempting to consider those who disagree as unreasonable or irrational. However, there will often be sources of reasonable epistemic and ethical disagreement.

First, experts might disagree about the extent to which one can have epistemic confidence based on existing (research) data that the intervention is superior to the control. This might be based on reasonable disagreement about the scientific design and conduct of relevant studies.

Second, disagreements about how to interpret existing data might sometimes be ethical disagreements about how much epistemic certainty should be required before implementing a new intervention in the real world. For example, “conservative” views would give more weight to the potential harms of low-probability negative outcomes, such as vaccine side-effects that are not ruled out with high certainty by early data. “Early adopter” views would give more weight to the benefits forgone if implementation were slowed while further research is conducted to collect more data.49 Both views are reasonable in different circumstances, but it is important to be clear about the extent to which different opinions reflect disagreement about science (or empirical and epistemic questions) versus disagreement about ethical and political values. Otherwise, the reasons for certain policies and the existence of reasonable debate may not be transparent to the public, which may undermine trust in public health experts. Experts’ policy recommendations are therefore always judgments about what level of evidence or knowledge is enough. This is a value judgment about the risks we are, or should be, prepared to take given the relevant uncertainty. Reasonable disagreement about where the threshold for “enough” lies can produce reasonable disagreement among public health experts. This shows how public health expertise, and public health more generally, can never be only about empirical matters. The values underpinning expert recommendations should ideally be unpacked and discussed, but they are not something on which vaccine experts have expertise. This is one reason why “follow the science” was never sufficient to justify pandemic policy, even though the slogan might have sounded reassuring to many, and there might therefore have been good reasons to include it in public health messaging.

Third, experts might disagree about the degree to which knowledge generated by research generalizes to real-world settings (the efficacy-effectiveness gap). In practice, then, there will at least sometimes (and perhaps often) be scope for reasonable disagreement among experts about whether the data at hand are sufficient for the ethically acceptable use of the intervention in specific real-world populations. Taking this disagreement seriously could not only contribute to trust in experts, but also potentially maximize the benefits of vaccine research. Failures to heed the views of the reasonable minority of experts, such as the JCVI, who were uncertain about the net medical benefits of COVID-19 vaccines in specific groups arguably foreclosed opportunities to collect more data, including data on alternative dosing strategies for mRNA vaccines50 and long-term outcomes from myocarditis.51 Consequently, COVID-19 vaccines were authorized even for groups where there may have been net expected harm.52

Being transparent about these types of disagreements may allow policymakers to authorize the use of vaccines and strongly recommend them for high-risk groups while (in the face of uncertainty) refraining from strong recommendations or mandating vaccination in low-risk groups.

New vaccines might be authorized and recommended at the population level based on a majority expert consensus regarding net benefit, but where a reasonable minority of experts disagree (Figure 2B). If the concerns of the minority turn out to be well founded but ignored, this can lead to preventable harms unforeseen by the majority. For example, in 2015–2017, a vaccine for dengue was approved for use and implemented in some countries despite at least one group of experts having expressed concerns about potential risks.53 When the harm foreseen by this group of experts eventuated, public trust in vaccination more generally plummeted in relevant communities. This increased mistrust was considered by some authors “a threat to pandemic preparedness.”54 Below, we examine how trust in experts could be undermined by decisions about vaccine policy, especially when they concern a novel vaccine during a pandemic.

The COVID-19 pandemic has been characterized by a certain lack of clarity surrounding public health agencies’ decision-making processes, recommendations, and policies, including on social media.55

In implementing vaccine policy, policymakers have limited public trust with which to barter. Moreover, restrictive policies are likely to undermine trust,56 as the fall in confidence in science during a pandemic characterized by restrictive response measures might suggest. Indeed, COVID-19 vaccines were also mandated for low-risk groups in some settings, such as many North American universities.57 Vaccine mandates might be effective at increasing vaccine uptake in the short term. However, even when they are, if they produce a decrease in trust in public health and in vaccine experts, they can undermine vaccine uptake in the long term.58 This long-term cost might ultimately outweigh the potential benefit of a short-term increase in vaccine uptake.59

Ethical analysis of COVID-19 mandates that weighed the benefits of higher vaccination rates against potential risks, such as the loss of healthcare workers and of trust in vaccines, was largely missing from public debate before the mandates were implemented.60 Meanwhile, COVID-19 vaccine mandates were sometimes sold as a “band-aid” solution to systemic public health challenges, such as insufficient hospital capacity. The ongoing evolution of COVID-19 vaccine mandates and policy is an instructive case for understanding how fast-paced, “well-intentioned” policymaking during a pandemic, without thorough and transparent ethical analysis, can ultimately backfire in terms of public trust.61

Infant and child vaccination has not always been easily accepted and implemented across society. Primary drivers of modern parental hesitancy towards child vaccination include religious reasons, personal beliefs or philosophical reasons, and safety concerns.62 Some communities of parents have always been, and probably will continue to remain, hesitant towards some or all vaccines for their children.63

The COVID-19 pandemic exacerbated mistrust in vaccines.64 Routine childhood vaccination rates have declined compared to pre-pandemic levels.65 Regarding COVID-19 vaccines, as of early 2023 only 10% of parents in the US and Canada had chosen to vaccinate their children under 5 years old. In both countries, less than 50% of children aged 5–11 had received 2 doses.66 In the UK, 9 out of 10 children aged 5–11 were not vaccinated against COVID-19.67 All this was happening while policy, and presumably the majority view among experts, held that such vaccines are medically beneficial for children. This situation seems to suggest a widespread lack of trust in vaccine and public health experts and/or uncertainty about net benefits in this group. Given our arguments above, there is a risk that relevant experts could lose some of their authority in the eyes of most parents.

The process of rebuilding or reinforcing trust should be sensitive to many legitimate concerns surrounding vaccine development and appropriate safety data, such as concerns related to Pfizer’s failure to follow the FDA’s instructions to conduct further studies into mRNA vaccine myocarditis.68 As argued above, trustworthiness also involves being transparent about uncertainty and disagreement. It requires acknowledging that the minority view of experts who recommended against using COVID-19 vaccines for certain low-risk groups might, after all, be right, or at least worth listening to.

Decision-making surrounding vaccine policy has the potential to affect public trust in vaccines more generally. This might also have broader implications for trust in scientific expertise, institutions, and public health agencies. COVID-19 vaccine hesitancy is shaped by many of the factors that usually influence vaccine hesitancy – confidence, complacency, convenience, communication, and context.69 Hesitancy that arose during the COVID-19 pandemic was distinctive in that the timelines for vaccine development were especially rapid, levels of global public scrutiny were high, and, in some countries, punitive measures for unvaccinated people were severe.70

Despite the celebrated pace of developing and rolling out COVID-19 vaccines within one year, the “warp speed” of the operation has also been met with expert and public concern about how fast is “too fast.”71 Children were left out of initial safety trials for COVID-19 vaccines. As a result, COVID-19 vaccine rollout in children began around one year later than in adults. As we saw, whether mRNA COVID-19 vaccines offered net benefit to children was not, at the time, a matter of consensus among the scientific community, or even among public health authorities. At least some concerns about the safety of the vaccine for certain pediatric populations, such as adolescent boys, emerged after cases of myocarditis were reported post-vaccination.72

Given these uncertainties, it is unsurprising that trust in vaccine and public health experts decreased, affecting childhood vaccination generally – even impacting vaccines that previously enjoyed quite widespread parental support.

Trust is a cornerstone of the success of public health interventions like vaccines – particularly infant and child vaccination. Decreased levels of trust in vaccines, experts, and public health agencies have already led to decreased global levels of vaccine uptake, particularly among children.73 Whom we take to be vaccine experts can be affected by the way expert disagreement and uncertainty are acknowledged both by experts themselves and by public health authorities. This is because attributions of expertise are acts of trust not only in the epistemic but also in the moral features of the people whom we, as individuals and as a society, decide to consider experts. The epistemic and the moral dimensions of trust are inseparable from each other because satisfying epistemic conditions requires committing to some ethical principles. These include humility, honesty, and transparency regarding the limitations of one’s knowledge, which help prevent epistemic failures such as overconfidence. To the extent that it contributes to preserving public trust, transparency about expert disagreement and uncertainty is an essential aspect of what it means to be an expert. Indeed, it can be as important as being confident in what one, as an expert, believes to be true.

1. JCVI (2021).

2. Iacobucci (2021), CDC (2021).

3. See, e.g., Fisher et al. (2021). It is worth noting that this line of argument can be questioned; see, e.g., Giubilini (2021).

4. McLeod (2021).

5. Jones (1996), McLeod (2021).

6. ACE (2000).

7. O’Neill (2020).

8. Larson et al. (2018).

9. Kitcher (2001).

10. Kitcher (2011): 35, 148.

11. Ibidem: 40.

12. IEA (2007).

13. Mizrahi (2013): 64.

14. Watson (2021): 72–76.

15. Goldman (2001).

16. Ibidem: 98.

17. Saver (2012).

18. Wiersma et al. (2018).

19. Goldman (2018).

20. Hardwig (1985).

21. Ibidem.

22. Collins (2014), Collins and Evans (2007).

23. Kitcher (2011).

24. Watson (2021): 170.

25. Moore (2017).

26. Hardwig (1985): 342.

27. Watson (2021).

28. Ibidem: 14.

29. Funk and Kennedy (2020).

30. Kennedy et al. (2022).

31. London (2021).

32. Angner (2006).

33. Bennett (2020): 248.

34. Moore (2017): 55–57.

35. Smith (2021).

36. Fried (1974).

37. London (2020).

38. Kitcher (1995).

39. London (2020).

40. Bardosh et al. (2022).

41. Rid et al. (2021).

42. We thank an anonymous reviewer for this observation.

43. de Melo-Martín and Intemann (2013).

44. Kuran (1997).

45. Bardosh et al. (2022).

46. Lovelace and Tirrell (2022).

47. Krause et al. (2021).

48. Bardosh et al. (2022).

49. Haines and Donald (1998).

50. Prasad (2023).

51. Kracalik et al. (2022).

52. Bardosh et al. (2022).

53. Jamrozik et al. (2021).

54. Larson et al. (2019).

55. Reveilhac (2022).

56. Nature (2018).

57. Bardosh et al. (2022).

58. Abrevaya and Mulligan (2011).

59. Bardosh et al. (2022).

60. Gur-Arie et al. (2021).

61. Holton (2021).

62. McKee and Bohannon (2016).

63. Bernstein et al. (2019).

64. He et al. (2022).

65. Balch (2022), Bramer et al. (2020), Nolen (2022).

66. AAP (2023), Government of Canada (2023).

67. ONS (2023).

68. Prasad (2023).

69. Razai et al. (2021).

70. Liebermann and Kaufman (2021).

71. Van Norman (2020).

72. Vogel and Couzin-Frankel (2021).

73. Larson et al. (2022).

Funding: This research was funded in whole, or in part, by the Wellcome Trust [203132] and [221719]. The Trust and Confidence research programme at the Pandemic Sciences Institute at the University of Oxford is supported by an award from the Moh Foundation.

Conflict of Interests: The authors declare no conflict of interest.

License: This is an open access article under the terms of the Creative Commons Attribution License, which permits use, distribution and reproduction in any medium, provided the original work is properly cited.

AAP (American Academy of Pediatrics) (2023), “Children and COVID-19 Vaccination Trends,” URL = https://www.aap.org/en/pages/2019-novel-coronavirus-covid-19-infections/ [Accessed 12.01.2023].

ACE (American College of Epidemiology) (2000), “Ethics Guidelines,” URL = https://www.acepidemiology.org/ethics-guidelines [Accessed 12.01.2023].

Abrevaya J., Mulligan K. (2011), “Effectiveness of State-Level Vaccination Mandates: Evidence from the Varicella Vaccine,” Journal of Health Economics 30 (5): 966–976.

Angner E. (2006), “Economists as Experts: Overconfidence in Theory and Practice,” Journal of Economic Methodology 13 (1): 1–24.

Balch B. (2022), “How Distrust of Childhood Vaccines Could Lead to More Breakouts of Preventable Diseases,” URL = https://www.aamc.org/news-insights/how-distrust-childhood-vaccines-could-lead-more-breakouts-preventable-diseases [Accessed 12.01.2023].

Bardosh K., de Figueiredo A., Gur-Arie R., Jamrozik E., Doidge J., Lemmens T., Keshavjee S., Graham J.E., Baral S. (2022), “The Unintended Consequences of COVID-19 Vaccine Policy: Why Mandates, Passports and Restrictions May Cause More Harm Than Good,” BMJ Global Health 7 (5): e008684.

Bennett M. (2020), “Should I do as I’m told? Trust, Experts, and COVID-19,” Kennedy Institute of Ethics Journal 30 (3): 243–263.

Bernstein J., Holroyd T.A., Atwell J.E., Ali J., Limaye R.J. (2019), “Rockland County’s Proposed Ban Against Unvaccinated Minors: Balancing Disease Control, Trust, and Liberty,” Vaccine 37 (30): 3933–3935.

Bramer C.A., Kimmins L.M., Swanson R., Kuo J., Vranesich P., Jacques-Carroll L.A., Shen A.K. (2020), “Decline in Child Vaccination Coverage During the COVID-19 Pandemic – Michigan Care Improvement Registry, May 2016–May 2020,” American Journal of Transplantation 20 (7): 1930–1931.

CDC (Centers for Disease Control and Prevention) (2021a), “CDC Recommends Pediatric COVID-19 Vaccine for Children 5 to 11 Years,” URL = https://www.cdc.gov/media/releases/2021/s1102-PediatricCOVID-19Vaccine.html [Accessed 16.07.2023].

CDC (Centers for Disease Control and Prevention) (2021b), “CDC Director Statement on Pfizer’s Use of COVID-19 Vaccine in Adolescents Age 12 and Older,” URL = https://www.cdc.gov/media/releases/2021/s0512-advisory-committee-signing.html [Accessed 16.07.2023].

CDC (Centers for Disease Control and Prevention) (2023), “Child and Adolescent Immunization Schedule by Age,” URL = https://www.cdc.gov/vaccines/schedules/hcp/imz/child-adolescent.html [Accessed 16.07.2023].

Collins H., Evans R. (2007), Rethinking Expertise, University of Chicago Press, Chicago.

Collins H. (2014), Are We All Scientific Experts Now?, Polity Press, Cambridge.

de Melo-Martín I., Intemann K. (2013), “Scientific Dissent and Public Policy,” EMBO Reports 14: 231–235.

Fisher L., Roberts L., Turner C. (2021), “Get Your Children Vaccinated Against Covid, Parents are Told,” URL = https://www.telegraph.co.uk/news/2021/09/13/chief-medical-officers-approve-vaccines-teenagers/ [Accessed 9.01.2023].

Fried C. (1974), Medical Experimentation: Personal Integrity and Social Policy, North Holland Publishing, Amsterdam.

Funk C., Kennedy B. (2020), “Public Confidence in Scientists has Remained Stable for Decades,” URL = https://www.pewresearch.org/fact-tank/2020/08/27/public-confidence-in-scientists-has-remained-stable-for-decades/ [Accessed 9.01.2023].

Giubilini A. (2021), “The Double Ethical Mistake of Vaccinating Children against COVID-19,” URL = https://blog.practicalethics.ox.ac.uk/2021/09/the-double-ethical-mistake-of-vaccinating-children-against-covid-19/ [Accessed 9.01.2023].

Giubilini A., Gupta S., Heneghan C. (2021), “A Focused Protection Vaccination Strategy: Why We Should not Target Children with COVID-19 Vaccination Policies,” Journal of Medical Ethics 47 (8): 565–566.

Goldman A.I. (2001), “Experts: Which Ones Should You Trust?,” Philosophy and Phenomenological Research 63 (1): 85–110.

Goldman A.I. (2018), “Expertise,” Topoi 37 (1): 3–10.

Government of Canada (2023), “Covid-19 Vaccination in Canada,” URL = https://health-infobase.canada.ca/covid-19/vaccination-coverage/ [Accessed 12.01.2023].

Gur-Arie R., Jamrozik E., Kingori P. (2021), “No Jab, No Job? Ethical Issues in Mandatory COVID-19 Vaccination of Healthcare Personnel,” BMJ Global Health 6 (2): e004877.

Haines A., Donald A. (1998), “Making Better Use of Research Funding,” British Medical Journal 317 (7150): 72–75.

Hardwig J. (1985), “Epistemic Dependence,” The Journal of Philosophy 82 (7): 335–349.

He K., Mack W.J., Neely M., Lewis L., Anand V. (2022), “Parental Perspectives on Immunizations: Impact of the COVID-19 Pandemic on Childhood Vaccine Hesitancy,” Journal of Community Health 47 (1): 39–52.

Holton W. (2021), “The Danger of Well-Intentioned Deceptions from Public Health Officials,” The Washington Post, URL = https://www.washingtonpost.com/outlook/2021/09/19/danger-well-intentioned-deceptions-public-health-officials/ [Accessed 12.01.2023].

IEA (International Epidemiological Association) (2007), Good Epidemiological Practice, URL = https://www.yumpu.com/en/document/read/19409945/iea-guidelines-for-proper-conduct-of-epidemiological-research-hrsa [Accessed 12.01.2023].

Iacobucci G. (2021), “Covid-19: JCVI Opts not to Recommend Universal Vaccination of 12–15 Year Olds,” British Medical Journal 374: n2180.

Jamrozik E., Heriot G., Bull S., Parker M., Oxford-Johns Hopkins Global Infectious Disease Ethics Collaborative (2021), “Vaccine-Enhanced Disease: Case Studies and Ethical Implications for Research and Public Health,” Wellcome Open Research 6: 154.

JCVI (Joint Committee on Vaccination and Immunisation) (2021), “JCVI Issues Updated Advice on COVID-19 Vaccination of Children Aged 12 to 15,” URL = https://www.gov.uk/government/news/jcvi-issues-updated-advice-on-covid-19-vaccination-of-children-aged-12-to-15 [Accessed 9.01.2023].

Johnson R.H., Blair J.A. (1983), Logical Self-Defense, McGraw Hill, New York.

Jones K. (1996), “Trust as an Affective Attitude,” Ethics 107 (1): 4–25.

Kennedy B., Tyson A., Funk C. (2022), “Americans’ Trust in Scientists, Other Groups Declines,” URL = https://www.pewresearch.org/science/2022/02/15/americans-trust-in-scientists-other-groups-declines/ [Accessed 9.01.2023].

Kitcher Ph. (1995), The Advancement of Science: Science Without Legend, Objectivity Without Illusions, Oxford University Press, Oxford.

Kitcher Ph. (2001), Science, Truth, and Democracy, Oxford University Press, Oxford.

Kitcher Ph. (2011), Science in a Democratic Society, Prometheus Books, New York.

Kloc M., Ghobrial R.M., Kuchar E., Lewicki S., Kubiak J.Z. (2020), “Development of Child Immunity in the Context of COVID-19 Pandemic,” Clinical Immunology 217: 108510.

Kracalik I., Oster M.E., Broder K.R., Cortese M.M., Glover M., Shields K., Creech C.B., Romanson B., Novosad Sh., Walter E.B. et al. (2022), “Outcomes at Least 90 Days Since Onset of Myocarditis After mRNA COVID-19 Vaccination in Adolescents and Young Adults in the USA: A Follow-Up Surveillance Study,” The Lancet Child & Adolescent Health 6 (11): 788–798.

Krause P.R., Fleming T.R., Peto R., Longini I.M., Figueroa J.P., Sterne J.A.C., Cravioto A., Rees H., Higgins J.P.T., Boutron I. et al. (2021), “Considerations in Boosting COVID-19 Vaccine Immune Responses,” The Lancet 398 (10308): 1377–1380.

Kuran T. (1997), Private Truths, Public Lies, Harvard University Press, Cambridge, Mass.

Larson H.J., Clarke R.M., Jarrett C., Eckersberger E., Levine Z., Schulz W.S., Paterson P. (2018), “Measuring Trust in Vaccination: A Systematic Review,” Human Vaccines & Immunotherapeutics 14 (7): 1599–1609.

Larson H.J., Hartigan-Go K., de Figueiredo A. (2019), “Vaccine Confidence Plummets in the Philippines Following Dengue Vaccine Scare: Why it Matters to Pandemic Preparedness,” Human Vaccines & Immunotherapeutics 15 (3): 625–627.

Larson H.J., Gakidou E., Murray Ch.J. (2022), “The Vaccine-Hesitant Moment,” New England Journal of Medicine 387 (1): 58–65.

Liebermann O., Kaufman A. (2021), “Pentagon Outlines Punishments for Civilian Employees if They Fail to Get Vaccinated,” URL = https://www.cnn.com/2021/10/19/politics/pentagon-civilian-vaccine-mandate-punishments/index.html [Accessed 4.05.2023].

London A.J. (2020), “Equipoise: Integrating Social Value and Equal Respect in Research with Humans,” [in:] The Oxford Handbook of Research Ethics, A.S. Iltis, D. MacKay (eds.), Oxford University Press, New York: 1–24.

London A.J. (2021), “Self-Defeating Codes of Medical Ethics and How to Fix Them: Failures in COVID-19 Response and Beyond,” The American Journal of Bioethics 21 (1): 4–13.

Lovelace B., Tirrell M. (2022), “Two Senior FDA Vaccine Regulators are Stepping Down,” URL = https://www.cnbc.com/2021/08/31/two-senior-fda-vaccine-regulators-are-stepping-down.html [Accessed 9.01.2023].

McKee C., Bohannon K. (2016), “Exploring the Reasons Behind Parental Refusal of Vaccines,” The Journal of Pediatric Pharmacology and Therapeutics 21 (2): 104–109.

McLeod C. (2021), “Trust,” [in:] The Stanford Encyclopedia of Philosophy, E.N. Zalta (ed.), URL = https://plato.stanford.edu/archives/fall2021/entries/trust/ [Accessed 12.01.2023].

Mizrahi M. (2013), “Why Arguments from Expert Opinion are Weak Arguments,” Informal Logic 33 (1): 57–79.

Moore A. (2017), Critical Elitism: Deliberation, Democracy, and the Problem of Expertise, Cambridge University Press, Cambridge.

Nature (Editors) (2018), “Laws are not the Only Way to Boost Immunization,” Nature 553: 249–250.

Nolen S. (2022), “Sharp Drop in Childhood Vaccination Threatens Millions of Lives,” The New York Times, URL = https://www.nytimes.com/2022/07/14/health/childhood-vaccination-rates-decline.html [Accessed 12.01.2023].

ONS (Office for National Statistics) (2023), “Coronavirus (COVID-19) Latest Insights: Vaccines,” URL = https://www.ons.gov.uk/peoplepopulationandcommunity/healthandsocialcare/conditionsanddiseases/articles/coronaviruscovid19latestinsights/vaccines#:~:text=Children%20aged%205%20to%2011,were%20recommended%20for%20other%20groups [Accessed 12.01.2023].

O’Neill O. (2020), “Trust and Accountability in a Digital Age,” Philosophy 95 (1): 3–17.

Ollove M. (2022), “Most States are Wary of Mandating COVID Shots for Kids,” URL = https://www.pewtrusts.org/en/research-and-analysis/blogs/stateline/2022/01/07/most-states-are-wary-of-mandating-covid-shots-for-kids [Accessed 12.01.2023].

Opel D.J., Diekema D.S., Ross L.F. (2021), “Should we Mandate a COVID-19 Vaccine for Children?,” JAMA Pediatrics 175 (2): 125–126.

Prasad V. (2023), “COVID-19 Vaccines: History of the Pandemic’s Great Scientific Success & Flawed Policy Implementation,” Monash Bioethics Review (Forthcoming).

Razai M.S., Oakeshott P., Esmail A., Wiysonge C.S., Viswanath K., Mills M.C. (2021), “COVID-19 Vaccine Hesitancy: The Five Cs to Tackle Behavioural and Sociodemographic Factors,” Journal of the Royal Society of Medicine 114 (6): 295–298.

Reveilhac M. (2022), “The Deployment of Social Media by Political Authorities and Health Experts to Enhance Public Information During the COVID-19 Pandemic,” SSM-Population Health 19: 101165.

Rid A., Lipsitch M., Miller F.G. (2021), “The Ethics of Continuing Placebo in SARS-CoV-2 Vaccine Trials,” Journal of the American Medical Association 325 (3): 219–220.

Saver R.S. (2012), “Is it Really all about the Money? Reconsidering Non-Financial Interests in Medical Research,” Journal of Law, Medicine & Ethics 40 (3): 467–481.

Smith R. (2021), “Time to Assume That Health Research is Fraudulent until Proven Otherwise?,” BMJ Opinion, URL = https://blogs.bmj.com/bmj/2021/07/05/time-to-assume-that-health-research-is-fraudulent-until-proved-otherwise/ [Accessed 9.01.2023].

Turner S. (2001), “What is the Problem with Experts?,” Social Studies of Science 31 (1): 123–149.

Van Norman G.A. (2020), “‘Warp Speed’ Operations in the COVID-19 Pandemic: Moving Too Quickly?,” JACC: Basic to Translational Science 5 (7): 730–734.

Vogel G., Couzin-Frankel J. (2021), “Israel Reports Link Between Rare Cases of Heart Inflammation and COVID-19 Vaccination in Young Men,” URL = https://www.science.org/content/article/israel-reports-link-between-rare-cases-heart-inflammation-and-covid-19-vaccination#:~:text=In%20a%20report%20submitted%20today,which%20is%20typical%20for%20myocarditis [Accessed 16.07.2023].

Watson J.C. (2021), Expertise. A Philosophical Introduction, Bloomsbury, London.

Wiersma M., Kerridge I., Lipworth W. (2018), “Dangers of Neglecting Non-Financial Conflicts of Interest in Health and Medicine,” Journal of Medical Ethics 44 (5): 319–322.